Agents for Question Answering

Overview

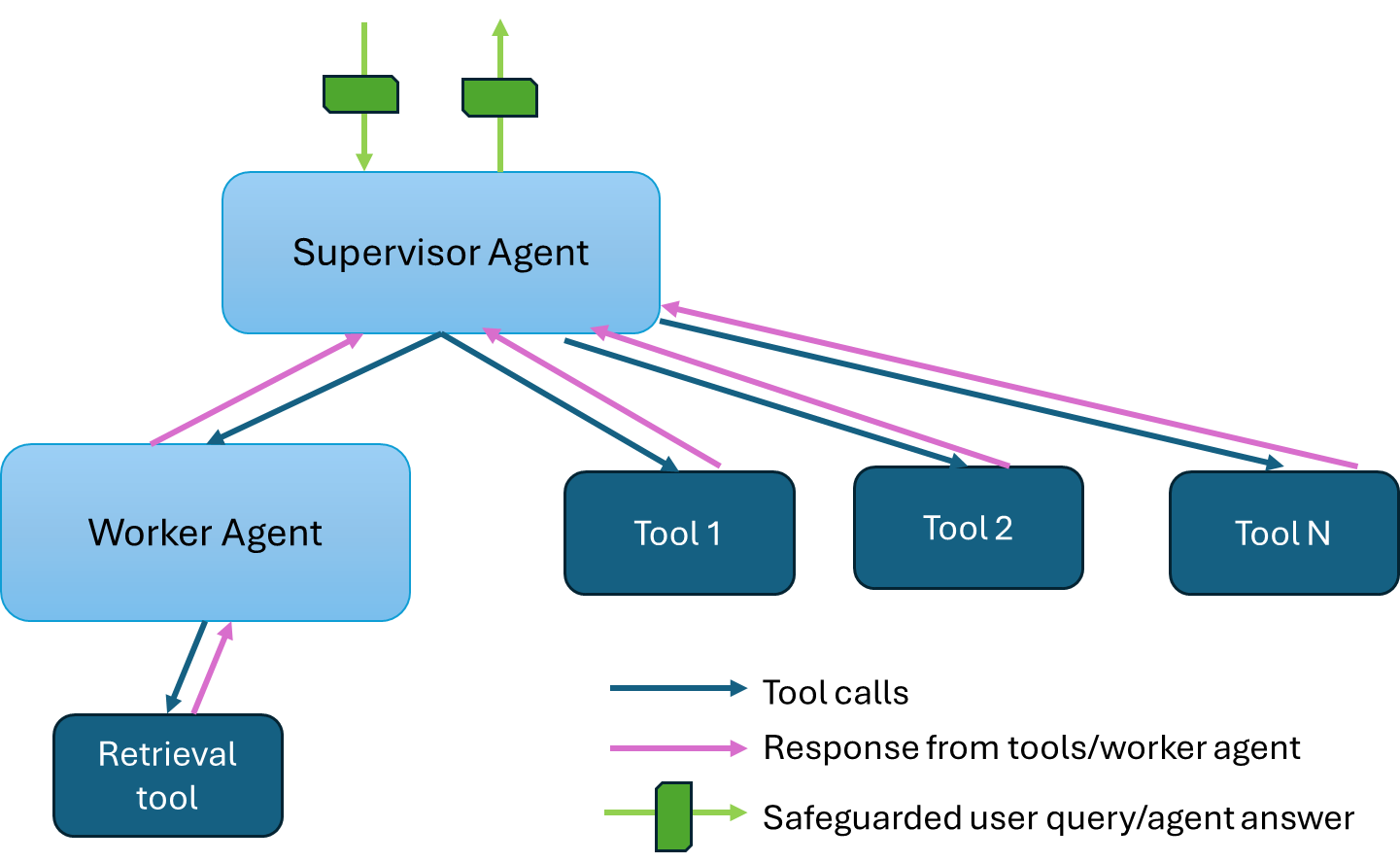

This example showcases a hierarchical multi-agent system for question-answering applications. The architecture diagram is shown above. The supervisor agent interfaces with the user and dispatches tasks to the worker agent and other tools to gather information and come up with answers. The worker agent uses the retrieval tool to generate answers to the queries posed by the supervisor agent. Other tools used by the supervisor agent may include APIs that interface with knowledge graphs, SQL databases, external knowledge bases, etc.
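The supervisor/worker split described here can be pictured with a toy sketch. Everything in it is hypothetical (the `Supervisor` class, `rag_worker`, and `knowledge_graph_tool` are illustrative stand-ins, not part of this example's code), and it simply calls every registered tool once, whereas a real supervisor would let the LLM decide which tools to call.

```python
from typing import Callable, Dict, List


def rag_worker(query: str) -> str:
    """Hypothetical worker agent: would call the retrieval tool and an LLM."""
    return f"[retrieved context for: {query}]"


def knowledge_graph_tool(query: str) -> str:
    """Hypothetical knowledge-graph API lookup."""
    return f"[knowledge graph facts for: {query}]"


class Supervisor:
    """Toy supervisor: fans a query out to registered tools and merges results."""

    def __init__(self, tools: Dict[str, Callable[[str], str]]) -> None:
        self.tools = tools

    def answer(self, query: str) -> str:
        # Assumption: every tool is called once, in registration order.
        # A real supervisor would choose tools and ordering via the LLM.
        findings: List[str] = [
            f"{name}: {tool(query)}" for name, tool in self.tools.items()
        ]
        return "\n".join(findings)


supervisor = Supervisor(
    {"worker_agent": rag_worker, "knowledge_graph": knowledge_graph_tool}
)
print(supervisor.answer("Most recent album by Taylor Swift"))
```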

The AgentQnA example is implemented using the component-level microservices defined in GenAIComps. The flow chart below shows the information flow between different microservices for this example.

---
config:
  flowchart:
    nodeSpacing: 400
    rankSpacing: 100
    curve: linear
  themeVariables:
    fontSize: 50px
---
flowchart LR
    %% Colors %%
    classDef blue fill:#ADD8E6,stroke:#ADD8E6,stroke-width:2px,fill-opacity:0.5
    classDef orange fill:#FBAA60,stroke:#ADD8E6,stroke-width:2px,fill-opacity:0.5
    classDef orchid fill:#C26DBC,stroke:#ADD8E6,stroke-width:2px,fill-opacity:0.5
    classDef invisible fill:transparent,stroke:transparent;

    %% Subgraphs %%
    subgraph DocIndexRetriever-MegaService["DocIndexRetriever MegaService "]
        direction LR
        EM([Embedding MicroService]):::blue
        RET([Retrieval MicroService]):::blue
        RER([Rerank MicroService]):::blue
    end
    subgraph UserInput[" User Input "]
        direction LR
        a([User Input Query]):::orchid
        Ingest([Ingest data]):::orchid
    end
    AG_REACT([Agent MicroService - react]):::blue
    AG_RAG([Agent MicroService - rag]):::blue
    LLM_gen{{LLM Service <br>}}
    DP([Data Preparation MicroService]):::blue
    TEI_RER{{Reranking service<br>}}
    TEI_EM{{Embedding service <br>}}
    VDB{{Vector DB<br><br>}}
    R_RET{{Retriever service <br>}}

    %% Questions interaction
    direction LR
    a[User Input Query] --> AG_REACT
    AG_REACT --> AG_RAG
    AG_RAG --> DocIndexRetriever-MegaService
    EM ==> RET
    RET ==> RER
    Ingest[Ingest data] --> DP

    %% Embedding service flow
    direction LR
    AG_RAG <-.-> LLM_gen
    AG_REACT <-.-> LLM_gen
    EM <-.-> TEI_EM
    RET <-.-> R_RET
    RER <-.-> TEI_RER

    direction TB
    %% Vector DB interaction
    R_RET <-.-> VDB
    DP <-.-> VDB

Why Agent for question answering?

- Improve relevancy of retrieved context. An agent can rephrase user queries, decompose them, and iterate to get the most relevant context for answering the user's questions. Compared to conventional RAG, a RAG agent can significantly improve the correctness and relevancy of the answer.

- Use tools to get additional knowledge. For example, knowledge graphs and SQL databases can be exposed as APIs for agents to gather knowledge that may be missing from the retrieval vector database.

- A hierarchy of agents can further improve performance. Expert worker agents, such as a retrieval agent, a knowledge graph agent, or a SQL agent, can provide high-quality output for different aspects of a complex query, and the supervisor agent can aggregate that information to provide a comprehensive answer.
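The first point, rephrasing and iterating until relevant context is found, can be sketched as a small loop. This is a minimal illustration under stated assumptions, not the agent's actual strategy: `iterative_retrieve` and the `toy_*` helpers are hypothetical names, the corpus is a placeholder, and in practice the relevance check and the rephrasing would be LLM calls.

```python
from typing import Callable, List


def iterative_retrieve(
    query: str,
    retrieve: Callable[[str], List[str]],
    is_relevant: Callable[[str, str], bool],
    rephrase: Callable[[str, int], str],
    max_rounds: int = 3,
) -> List[str]:
    """Retry retrieval with rephrased queries until relevant context is found."""
    current = query
    for round_no in range(max_rounds):
        # Keep only documents judged relevant to the original question.
        docs = [d for d in retrieve(current) if is_relevant(query, d)]
        if docs:
            return docs
        # Nothing useful came back: try a different wording next round.
        current = rephrase(query, round_no)
    return []


# Placeholder stand-ins so the sketch runs without any services.
corpus = {"taylor swift albums": ["Document listing Taylor Swift albums"]}


def toy_retrieve(q: str) -> List[str]:
    return corpus.get(q.lower(), [])


def toy_is_relevant(question: str, doc: str) -> bool:
    return "albums" in doc.lower()


def toy_rephrase(question: str, round_no: int) -> str:
    options = ["Taylor Swift albums", "Taylor Swift discography"]
    return options[round_no % len(options)]


print(
    iterative_retrieve(
        "Most recent album by Taylor Swift", toy_retrieve, toy_is_relevant, toy_rephrase
    )
)
```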

Roadmap

- v0.9: Worker agent uses an open-source web search tool (DuckDuckGo); agents use OpenAI GPT-4o-mini as the LLM backend.

- v1.0: Worker agent uses the OPEA retrieval megaservice as a tool.

- v1.0 or later: Agents use an open-source LLM backend.

- v1.1 or later: Add safeguards.

Getting started

- Build agent docker image

First, clone the OPEA GenAIComps repo:

export WORKDIR=<your-work-directory>
cd $WORKDIR
git clone https://github.com/opea-project/GenAIComps.git

Then build the agent docker image. Both the supervisor agent and the worker agent use the same docker image, but when we launch the two agents we specify different strategies and register different tools.

cd GenAIComps
docker build -t opea/agent-langchain:latest --build-arg https_proxy=$https_proxy --build-arg http_proxy=$http_proxy -f comps/agent/langchain/Dockerfile .

- Launch tool services

In this example, we use some of the mock APIs provided in the Meta CRAG KDD Challenge to demonstrate the benefits of gaining additional context from mock knowledge graphs.

docker run -d -p=8080:8000 docker.io/aicrowd/kdd-cup-24-crag-mock-api:v0

- Set up environment for this example

First, clone this repo:

cd $WORKDIR
git clone https://github.com/opea-project/GenAIExamples.git

Second, set up the environment variables:

export TOOLSET_PATH=$WORKDIR/GenAIExamples/AgentQnA/tools/
# optional: OPENAI_API_KEY
export OPENAI_API_KEY=<your-openai-key>

- Launch agent services

The configurations of the supervisor agent and the worker agent are defined in the docker-compose YAML file. We currently use OpenAI GPT-4o-mini as the LLM, and we plan to add support for llama3.1-70B-instruct (served by TGI-Gaudi) in a subsequent release. To use the OpenAI LLM, run the commands below:

cd docker_compose/intel/cpu/xeon
bash launch_agent_service_openai.sh
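The environment exported in the steps above can be sanity-checked with a short script. This is a sketch, not part of the example: `missing_env_vars` is a hypothetical helper, and treating `OPENAI_API_KEY` as required is an assumption that only applies when launching with the OpenAI backend.

```python
import os
from typing import List, Mapping, Sequence

# Variable names taken from the setup steps; OPENAI_API_KEY is assumed to be
# needed only for the OpenAI launch script.
REQUIRED_VARS = ("WORKDIR", "TOOLSET_PATH", "OPENAI_API_KEY")


def missing_env_vars(
    env: Mapping[str, str], required: Sequence[str] = REQUIRED_VARS
) -> List[str]:
    """Return the names in `required` that are unset or empty in `env`."""
    return [name for name in required if not env.get(name)]


missing = missing_env_vars(os.environ)
if missing:
    print("Unset variables:", ", ".join(missing))
else:
    print("All required variables are set.")
```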

Validate services

First, look at the logs of the agent docker containers:

docker logs docgrader-agent-endpoint

docker logs react-agent-endpoint

You should see something like "HTTP server setup successful" if the docker containers are started successfully.
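The same log check can be scripted. The sketch below shells out to `docker logs` for the two container names used above and looks for the startup message. The helper names are hypothetical, and matching the exact string "HTTP server setup successful" is an assumption, since the actual log line may differ slightly.

```python
import subprocess
from typing import Dict, Sequence

CONTAINERS = ("docgrader-agent-endpoint", "react-agent-endpoint")
READY_MARKER = "HTTP server setup successful"  # assumed exact wording


def server_started(log_text: str, marker: str = READY_MARKER) -> bool:
    """Return True if the startup marker appears anywhere in the log text."""
    return marker in log_text


def container_logs(name: str) -> str:
    """Return combined stdout and stderr of `docker logs <name>`."""
    result = subprocess.run(
        ["docker", "logs", name], capture_output=True, text=True, check=False
    )
    return result.stdout + result.stderr


def check_containers(names: Sequence[str] = CONTAINERS) -> Dict[str, bool]:
    """Map each container name to whether its log shows the startup marker."""
    return {name: server_started(container_logs(name)) for name in names}
```

Calling `check_containers()` on the host where the containers run returns a dictionary keyed by container name.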

Second, validate worker agent:

curl http://${ip_address}:9095/v1/chat/completions -X POST -H "Content-Type: application/json" -d '{
    "query": "Most recent album by Taylor Swift"
}'

Third, validate supervisor agent:

curl http://${ip_address}:9090/v1/chat/completions -X POST -H "Content-Type: application/json" -d '{
    "query": "Most recent album by Taylor Swift"
}'
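The two curl checks can also be issued from Python using only the standard library. This is a sketch that mirrors the requests above (same path, ports, header, and JSON body). The function names are hypothetical, and the response is returned as raw text because its format is not described here.

```python
import json
import urllib.request

WORKER_PORT = 9095      # worker agent port from the curl command
SUPERVISOR_PORT = 9090  # supervisor agent port from the curl command


def build_request(ip_address: str, port: int, query: str) -> urllib.request.Request:
    """Build the same POST request that the curl commands send."""
    url = f"http://{ip_address}:{port}/v1/chat/completions"
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_agent(ip_address: str, port: int, query: str, timeout: float = 120.0) -> str:
    """Send the query to an agent endpoint and return the raw response text."""
    request = build_request(ip_address, port, query)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

For example, `ask_agent(ip_address, WORKER_PORT, "Most recent album by Taylor Swift")` corresponds to the worker check, and passing `SUPERVISOR_PORT` corresponds to the supervisor check.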

How to register your own tools with agent

You can take a look at the tools YAML and Python files in this example. For more details, please refer to the "Provide your own tools" section in the instructions here.